Introduction to HTTP

The Hypertext Transfer Protocol (HTTP) is the foundation of data exchange on the Web: it defines how clients such as web browsers request resources and how servers respond. HTTP is an application-layer protocol in the Internet protocol suite. It enables web browsers to retrieve resources, such as HTML documents, images, and videos, from web servers. Over the years, HTTP has evolved to meet the demands of an ever-expanding and increasingly complex web.

HTTP/1.0 and HTTP/1.1

HTTP/1.0

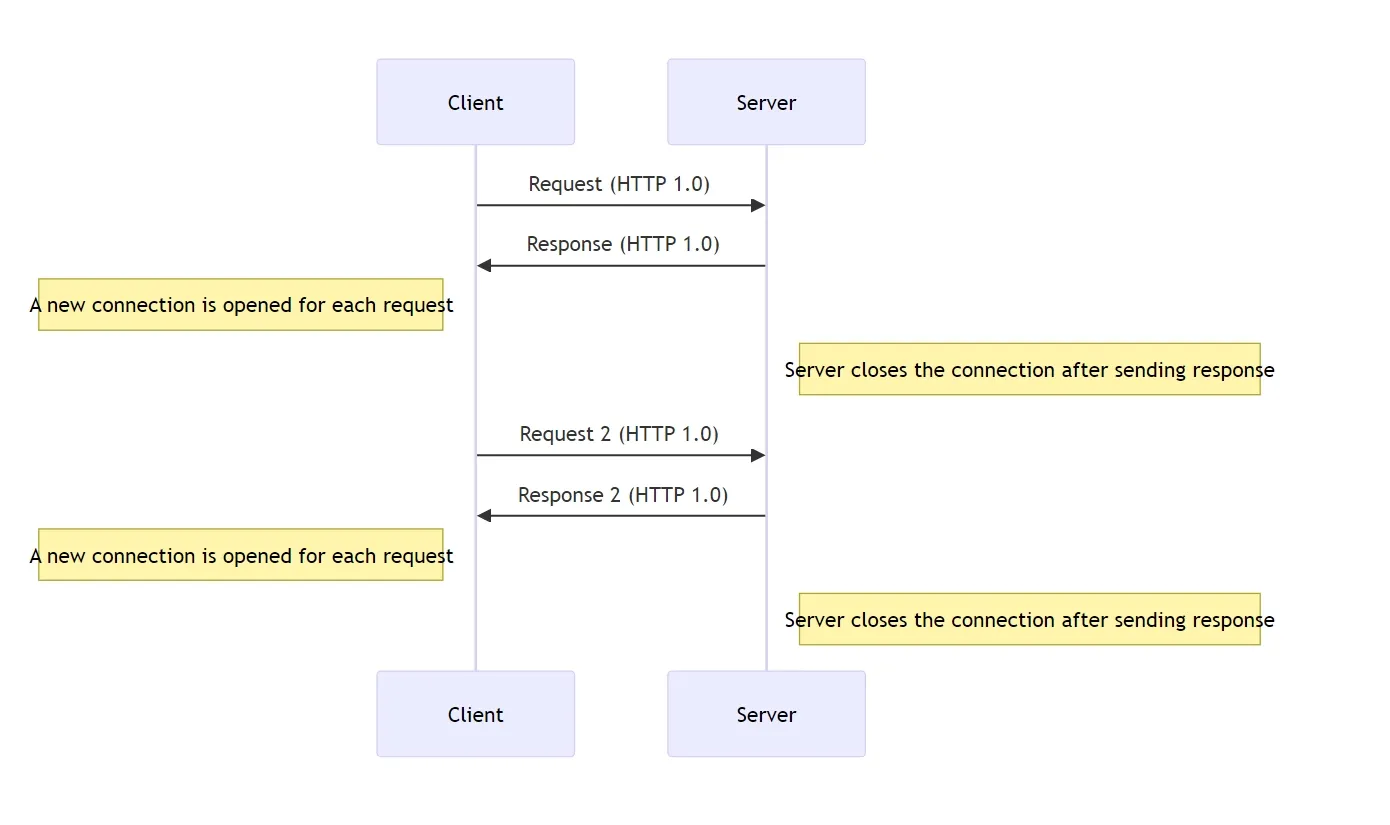

Click Here For Explanation Of Above Diagram (working of http1)

The key points shown in the diagram are:

- A new connection is opened for each request: For every request the client sends (HTTP/1.0), a new TCP connection is established between the client and the server.

- The server closes the connection after sending the response: Once it has sent the response to the client's request, the server immediately closes the connection.

This process repeats for subsequent requests, where a new connection is opened, the request is sent, the response is received, and the connection is closed again.

The lack of persistent connections in HTTP/1.0 results in inefficiencies and increased overhead, as a new TCP connection needs to be established for every request-response cycle. This can lead to higher latency and slower performance, especially for websites or applications that require multiple resources to be loaded.
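The overhead described above can be put into rough numbers. The sketch below is a simplified model, not a measurement: it assumes one round trip (RTT) for each TCP handshake and one for each request/response, requests sent one after another, and it ignores TLS, TCP slow start, server processing time, and the parallel connections real browsers open. The function names are hypothetical.

```python
# Back-of-the-envelope latency model (illustrative assumptions, see above):
# one TCP connection per request (HTTP/1.0 default) versus one reused connection.


def non_persistent_ms(num_requests: int, rtt_ms: float) -> float:
    # Every request pays a fresh TCP handshake (1 RTT) plus its own exchange (1 RTT).
    return num_requests * (rtt_ms + rtt_ms)


def persistent_ms(num_requests: int, rtt_ms: float) -> float:
    # One handshake up front, then one RTT per request on the same connection.
    return rtt_ms + num_requests * rtt_ms


if __name__ == "__main__":
    for n in (1, 10, 50):
        print(
            f"{n:>3} requests at 50 ms RTT: "
            f"new connection each = {non_persistent_ms(n, 50):.0f} ms, "
            f"persistent = {persistent_ms(n, 50):.0f} ms"
        )
```

Under these assumptions, 10 requests at a 50 ms RTT take 1000 ms with a new connection per request versus 550 ms on one persistent connection, and the gap grows linearly with the number of requests.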

The introduction of persistent connections in HTTP/1.1 aimed to address this issue by allowing a single TCP connection to be reused for multiple requests and responses, reducing the overhead of opening and closing connections for each request.

HTTP/1.0 was documented in 1996 (RFC 1945) and was the first version of the protocol to be widely adopted. Some key features of HTTP/1.0 include:

- Simple Request/Response Model: HTTP/1.0 uses a straightforward request/response approach where the client sends a request to the server, and the server sends back the requested data.

- Stateless Protocol: Each request from a client to a server is treated as an independent transaction, unrelated to any previous request. This statelessness simplifies the protocol, but each request must carry all the information the server needs (such as authentication credentials), which adds redundant data to every exchange.

- Text-Based Communication: Requests and responses are communicated as plain text, making them easy to construct and debug.

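- Limited Persistent Connections: By default, HTTP/1.0 closes the TCP connection after each request/response cycle. Some clients and servers supported an unofficial "Connection: keep-alive" extension, but it was never part of the HTTP/1.0 specification, and the default behavior adds significant overhead from repeatedly setting up and tearing down connections.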
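To make the text-based format concrete, here is a minimal sketch that builds an HTTP/1.0 request and reads a response's status line. The helper names `build_request` and `parse_status_line` are hypothetical and no network I/O is performed; a real client would write these bytes to a TCP socket that the server closes after responding.

```python
CRLF = "\r\n"


def build_request(method: str, path: str, headers: dict) -> bytes:
    # Request line, then one "Name: value" line per header, then an empty line.
    lines = [f"{method} {path} HTTP/1.0"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    return (CRLF.join(lines) + CRLF + CRLF).encode("ascii")


def parse_status_line(response: bytes) -> tuple:
    # The first line of a response looks like "HTTP/1.0 200 OK".
    status_line = response.split(b"\r\n", 1)[0].decode("ascii")
    parts = status_line.split(" ", 2)
    reason = parts[2] if len(parts) > 2 else ""  # the reason phrase may be empty
    return parts[0], int(parts[1]), reason


if __name__ == "__main__":
    # Stateless: each request repeats whatever the server needs to know.
    req = build_request("GET", "/index.html", {"User-Agent": "demo/0.1"})
    print(req.decode("ascii"))
    print(parse_status_line(b"HTTP/1.0 200 OK\r\nContent-Type: text/html\r\n\r\n"))
```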

HTTP/1.1

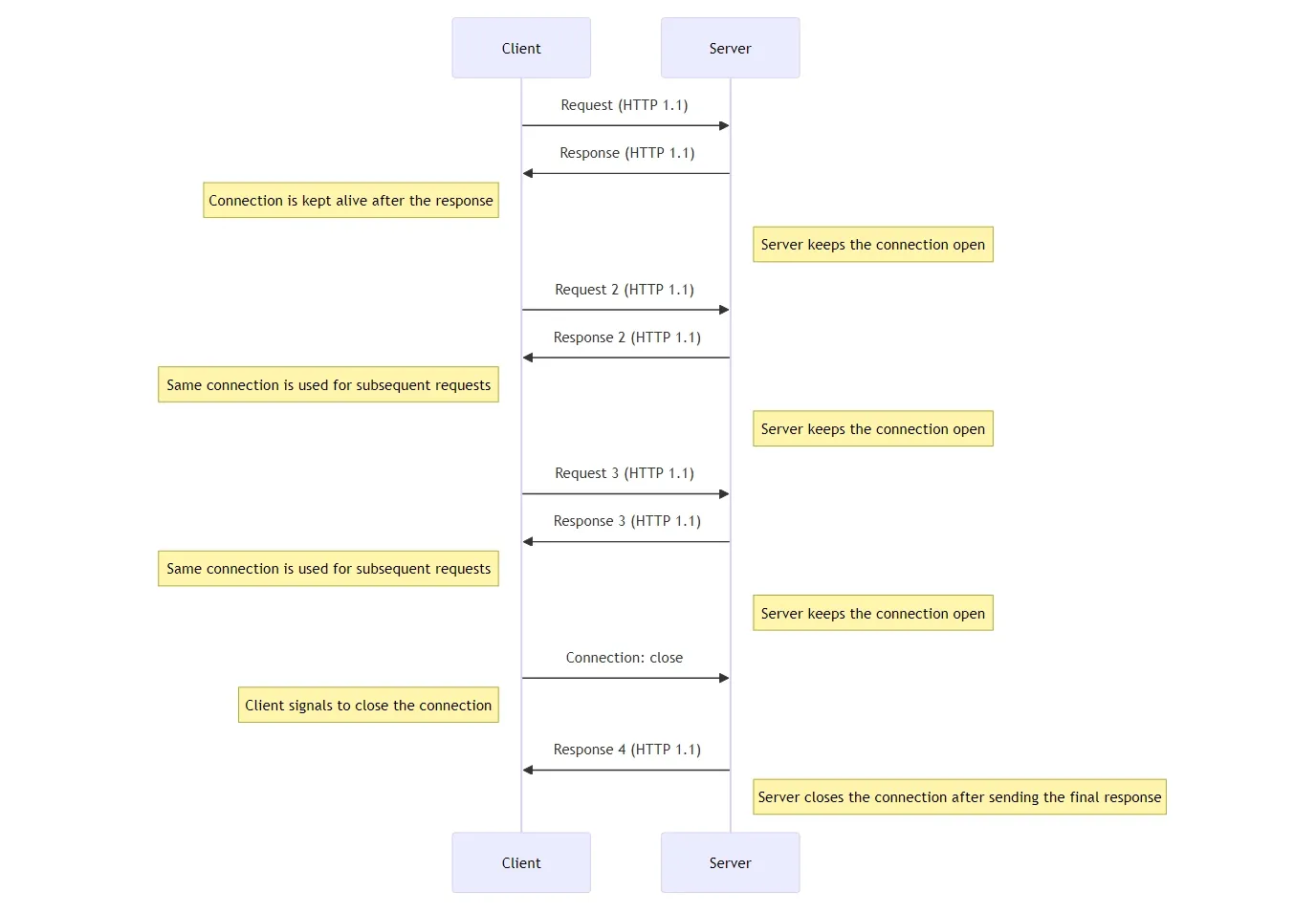

Click Here For Explanation Of Above Diagram (working of http1.1)

This diagram illustrates how HTTP/1.1 works in terms of connection management between a client and a server.

The key points shown are:

- Connection is kept alive after the response: After the server sends the initial response (HTTP/1.1) to the client's request, the connection stays open instead of being closed immediately.

- Server keeps the connection open: The server does not close the connection after sending the response.

- Same connection is used for subsequent requests: The client and server exchange additional requests and responses over the same established connection instead of creating a new connection for each request/response cycle.

- Client signals to close the connection: When the client is done, it includes a “Connection: close” header in its final request, signaling that the connection should be closed after that exchange.

- Server closes the connection after sending the final response: After receiving the request carrying “Connection: close”, the server sends the final response (HTTP/1.1) and then closes the connection.

The main advantage of this approach in HTTP/1.1 is that it allows for persistent connections, where a single TCP connection can be reused for multiple HTTP requests and responses, reducing the overhead of establishing new connections for every request. This improves performance and efficiency compared to the non-persistent connections used in earlier versions of HTTP.
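The keep-alive behavior described above comes down to a small decision the server makes on each request. The sketch below assumes the persistence rules written down for HTTP/1.1 (RFC 7230, section 6.3), ignores the proxy-specific cases, and uses hypothetical helper names. It shows why the “Connection: close” header matters: HTTP/1.1 connections stay open by default, while HTTP/1.0 ones close unless the client opts in with "keep-alive".

```python
from typing import Optional, Set


def connection_tokens(header_value: Optional[str]) -> Set[str]:
    # The Connection header is a comma-separated, case-insensitive token list.
    if not header_value:
        return set()
    return {token.strip().lower() for token in header_value.split(",")}


def keeps_alive(http_version: str, connection_header: Optional[str]) -> bool:
    tokens = connection_tokens(connection_header)
    if "close" in tokens:
        return False                       # an explicit close always wins
    if http_version == "HTTP/1.1":
        return True                        # HTTP/1.1: persistent by default
    if http_version == "HTTP/1.0":
        return "keep-alive" in tokens      # HTTP/1.0: closed unless opted in
    return False                           # unknown version: close to be safe


if __name__ == "__main__":
    # One HTTP/1.1 connection reused for three requests; the last one closes it.
    requests = [("HTTP/1.1", None), ("HTTP/1.1", None), ("HTTP/1.1", "close")]
    for i, (version, conn) in enumerate(requests, start=1):
        print(f"request {i}: keep connection open -> {keeps_alive(version, conn)}")
```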

HTTP/1.1, first published in 1997 (RFC 2068) and revised in 1999 (RFC 2616), addressed many limitations of HTTP/1.0 and introduced several enhancements:

- Persistent Connections: One of the most significant improvements in HTTP/1.1 is the use of persistent connections, where a single TCP connection can be reused for multiple requests and responses. This reduces latency and the overhead of establishing multiple connections.

- Chunked Transfer Encoding: HTTP/1.1 introduced chunked transfer encoding, allowing a server to start sending a response before knowing its total size, which is beneficial for dynamically generated content.

- More Efficient Caching: HTTP/1.1 includes more sophisticated caching mechanisms, such as the Cache-Control header, which provides fine-grained control over caching policies.

- Additional Methods and Status Codes: HTTP/1.1 expanded the range of HTTP methods (e.g., OPTIONS, PUT, DELETE) and status codes, providing more tools for developers to handle different types of requests and responses.

- Host Header: The Host header, which every HTTP/1.1 request must include, tells the server which domain the client wants. This allows multiple domains to be hosted on a single IP address, a critical feature for the expansion of the web.
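Chunked transfer encoding is simple enough to sketch directly. The example below assumes the HTTP/1.1 chunked format (RFC 9112, section 7.1): each piece is sent as its size in hexadecimal, CRLF, the data, CRLF, and the body ends with a zero-size chunk. The helper names are hypothetical; the decoder skips chunk extensions and ignores trailer fields.

```python
from typing import Iterable


def encode_chunked(pieces: Iterable[bytes]) -> bytes:
    out = bytearray()
    for piece in pieces:
        if piece:  # an empty chunk would be read as the terminator, so skip it
            out += f"{len(piece):X}\r\n".encode("ascii") + piece + b"\r\n"
    out += b"0\r\n\r\n"  # last chunk, followed by an empty trailer section
    return bytes(out)


def decode_chunked(body: bytes) -> bytes:
    data = bytearray()
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        # Chunk size in hex; anything after ";" is a chunk extension.
        size = int(body[pos:line_end].split(b";", 1)[0], 16)
        pos = line_end + 2
        if size == 0:
            return bytes(data)
        data += body[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF


if __name__ == "__main__":
    # The sender can emit pieces as they are generated, without a Content-Length.
    wire = encode_chunked([b"Hello, ", b"chunked ", b"world!"])
    print(wire)
    print(decode_chunked(wire))
```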

HTTP/2: A Major Overhaul

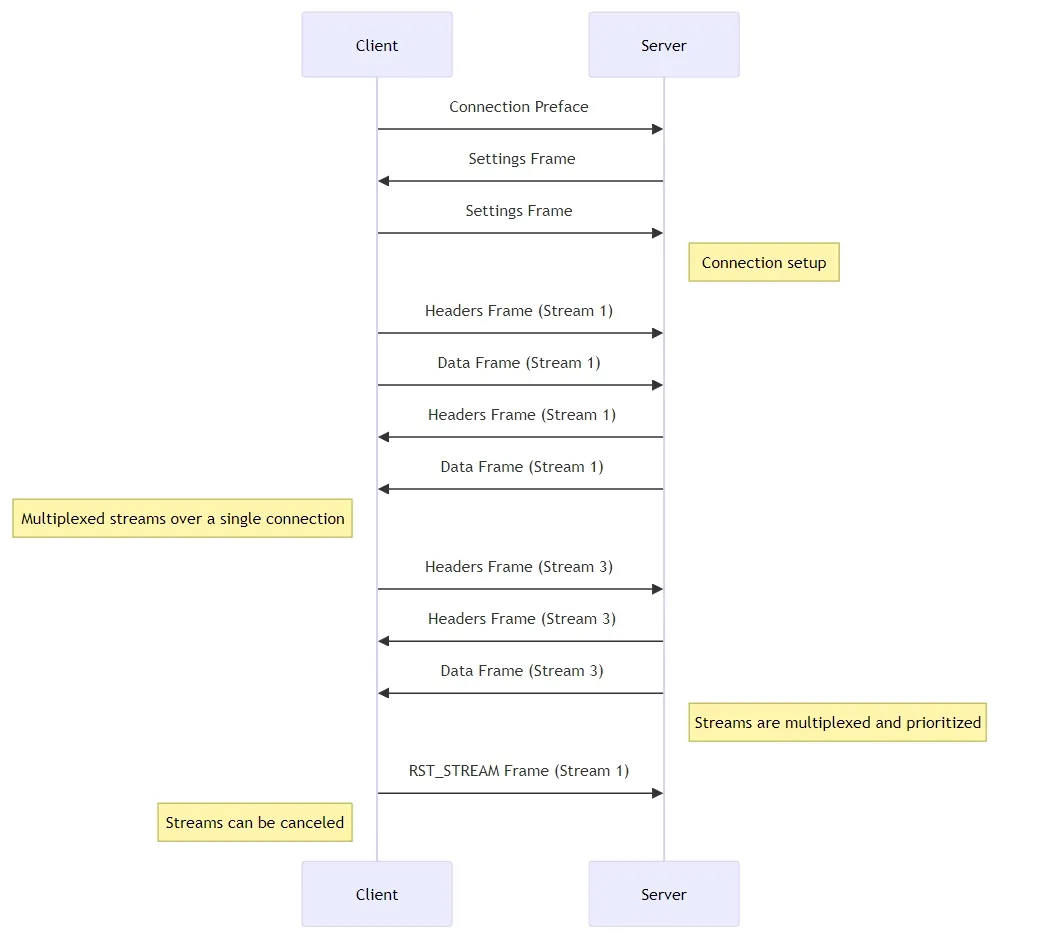

Click Here For Explanation Of Above Diagram (working of http2)

This diagram illustrates communication between a client and a server using HTTP/2, which multiplexes many streams over a single TCP connection.

The key steps shown in the diagram are:

- Connection setup:
  - The client sends a connection preface to initiate the connection.
  - The client and server exchange SETTINGS frames to negotiate connection parameters.

- Multiplexed streams over a single connection:
  - After the connection is established, the client and server can send frames belonging to different streams (e.g., Stream 1 and Stream 3) over the same connection.
  - HEADERS and DATA frames for each stream are interleaved as they travel between the client and server.

- Streams are multiplexed and prioritized:
  - The streams are multiplexed together, allowing efficient use of the connection and prioritization of streams.

- Streams can be canceled:
  - An individual stream (in this case, Stream 1) can be canceled or reset using an RST_STREAM frame without affecting the connection or the other streams.
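The frames in the diagram share one simple layout. The sketch below encodes the 9-byte HTTP/2 frame header (24-bit length, 8-bit type, 8-bit flags, 31-bit stream ID) from RFC 7540 and RFC 9113 and builds an illustrative frame sequence: the client preface, an empty SETTINGS frame, interleaved frames for streams 1 and 3, and an RST_STREAM that cancels stream 1 only. The HEADERS payloads are placeholder bytes, not valid HPACK, and the function names are hypothetical.

```python
import struct

# Client connection preface: a fixed 24-byte string sent before any frames.
CLIENT_PREFACE = b"PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n"

# Frame type codes and flags used below (RFC 7540, section 6).
DATA, HEADERS, RST_STREAM, SETTINGS = 0x0, 0x1, 0x3, 0x4
END_STREAM, END_HEADERS = 0x1, 0x4
CANCEL = 0x8  # RST_STREAM error code: the stream is no longer needed


def frame(frame_type: int, flags: int, stream_id: int, payload: bytes = b"") -> bytes:
    """Prefix a payload with the 9-byte HTTP/2 frame header."""
    length = len(payload)
    if length >= 1 << 24:
        raise ValueError("frame payload length must fit in 24 bits")
    header = struct.pack(
        ">BHBBI",
        length >> 16, length & 0xFFFF,  # 24-bit length split as 8 + 16 bits
        frame_type, flags,
        stream_id & 0x7FFFFFFF,         # reserved high bit left as 0
    )
    return header + payload


def parse_header(data: bytes) -> tuple:
    """Return (length, type, flags, stream_id) from the first 9 bytes."""
    len_hi, len_lo, frame_type, flags, stream_id = struct.unpack(">BHBBI", data[:9])
    return (len_hi << 16) | len_lo, frame_type, flags, stream_id & 0x7FFFFFFF


if __name__ == "__main__":
    placeholder = b"<hpack block>"  # NOT real HPACK; stands in for encoded headers
    frames = [
        frame(SETTINGS, 0, 0),                                    # stream 0: the connection
        frame(HEADERS, END_HEADERS, 1, placeholder),              # open stream 1
        frame(HEADERS, END_HEADERS, 3, placeholder),              # open stream 3
        frame(DATA, 0, 3, b"stream 3, part 1"),
        frame(DATA, 0, 1, b"stream 1, part 1"),                   # interleaved
        frame(RST_STREAM, 0, 1, struct.pack(">I", CANCEL)),       # cancel stream 1 only
        frame(DATA, END_STREAM, 3, b"stream 3, part 2"),          # stream 3 carries on
    ]
    wire = CLIENT_PREFACE + b"".join(frames)
    print(f"{len(wire)} bytes on one connection")
    for f in frames:
        print(parse_header(f))
```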

HTTP/2, standardized in 2015, brought significant improvements over HTTP/1.1, addressing performance bottlenecks and inefficiencies. Key features of HTTP/2 include:

- Binary Protocol: Unlike the text-based HTTP/1.x, HTTP/2 uses a binary framing layer, which is more efficient to parse and less prone to errors.

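- Multiplexing: HTTP/2 allows multiple requests and responses to be in flight concurrently over a single connection, eliminating the HTTP-level head-of-line blocking of HTTP/1.x, where one slow response could hold up the requests queued behind it. Because all streams still share one TCP connection, however, a single lost TCP packet can stall every stream until it is retransmitted.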

- Header Compression: HTTP/2 introduces HPACK, a header compression algorithm that reduces the overhead caused by repetitive header data, improving performance.

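- Server Push: HTTP/2 allows servers to push resources proactively to the client before the client explicitly requests them, with the aim of reducing latency and improving page load times. In practice the feature saw little use, and Chrome has since removed support for it.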

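- Stream Prioritization: Clients can signal stream priorities so that more important resources are delivered first, which enhances the user experience. The original priority scheme from RFC 7540 was later deprecated (RFC 9113) in favor of the simpler Extensible Priorities scheme (RFC 9218).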
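Header compression is easiest to see in HPACK's smallest case. The sketch below, assuming RFC 7541, implements prefix-integer encoding (section 5.1) and the "indexed header field" representation (section 6.1), which lets a common header such as ":method: GET" travel as a single byte by pointing into the static table. Only a handful of static-table entries are included, the helper names are hypothetical, and a real encoder also needs the dynamic table and Huffman-coded literals.

```python
# A few entries from the HPACK static table (RFC 7541, Appendix A).
STATIC_TABLE = {
    (":method", "GET"): 2,
    (":method", "POST"): 3,
    (":path", "/"): 4,
    (":scheme", "http"): 6,
    (":scheme", "https"): 7,
    (":status", "200"): 8,
}


def encode_integer(value: int, prefix_bits: int, first_byte_flags: int = 0) -> bytes:
    # Small values fit in the N-bit prefix of the first byte.
    max_prefix = (1 << prefix_bits) - 1
    if value < max_prefix:
        return bytes([first_byte_flags | value])
    # Otherwise fill the prefix with 1s and continue in 7-bit groups.
    out = [first_byte_flags | max_prefix]
    value -= max_prefix
    while value >= 128:
        out.append((value % 128) | 0x80)  # 7 data bits plus a continuation bit
        value //= 128
    out.append(value)
    return bytes(out)


def encode_indexed(name: str, value: str) -> bytes:
    # Indexed header field: leading 1 bit, then the table index (7-bit prefix).
    return encode_integer(STATIC_TABLE[(name, value)], 7, 0x80)


if __name__ == "__main__":
    request = [(":method", "GET"), (":scheme", "https"), (":path", "/")]
    block = b"".join(encode_indexed(n, v) for n, v in request)
    print(block.hex())  # three header fields in three bytes
    print(list(encode_integer(1337, 5)))
```

The values 10 and 1337 with a 5-bit prefix are the worked examples from RFC 7541, Appendix C.1, which makes them a convenient check.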

HTTP/3: The Next Generation

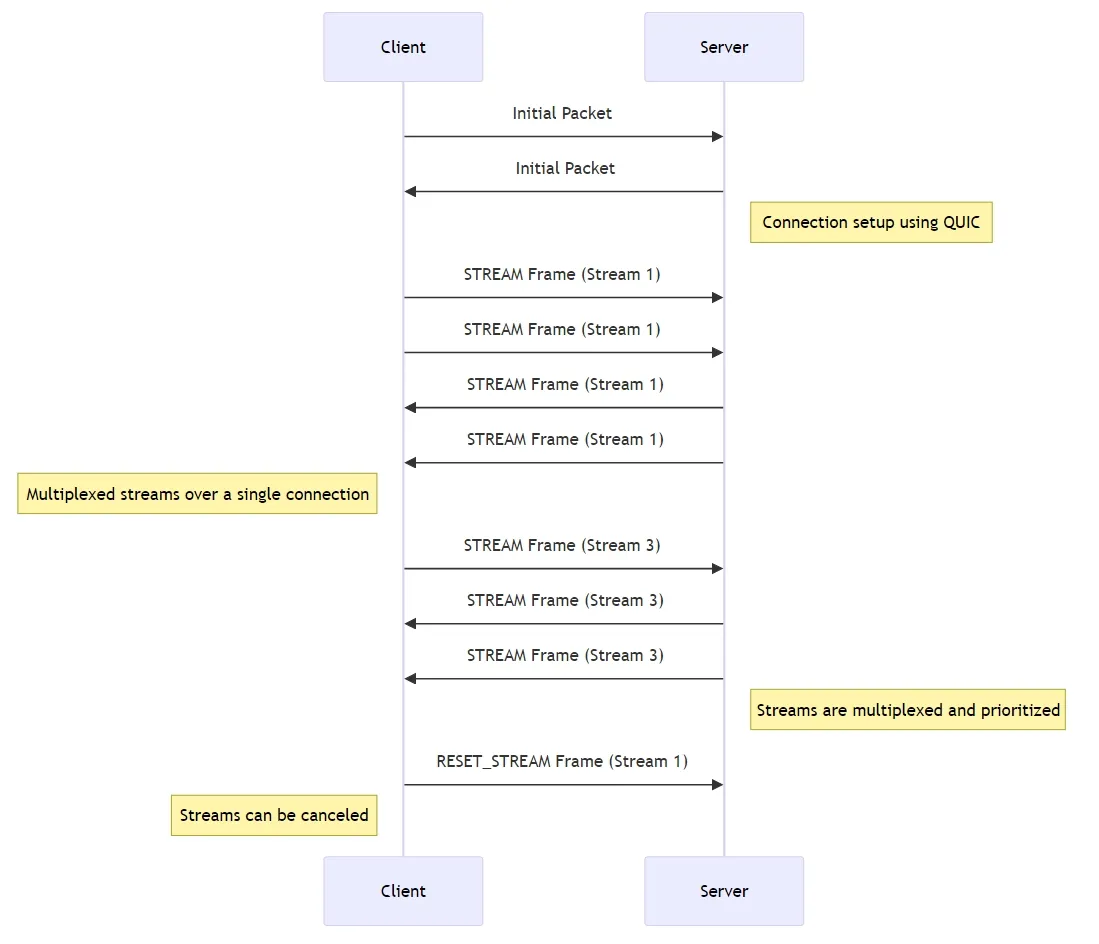

Click Here For Explanation Of Above Diagram (working of http3)

This diagram illustrates the process of multiplexed streams over a single connection using the QUIC protocol between a client and a server.

The key points shown in the diagram are:

- Connection setup using QUIC: The client and server exchange initial handshake packets to establish a QUIC connection.

- Multiplexed streams over a single connection: Once the connection is set up, multiple streams (Stream 1 and Stream 3) can be carried over the same connection. Frames from these streams are interleaved as they travel between the client and server.

- Streams are multiplexed and prioritized: The different streams are multiplexed together, allowing for prioritization and efficient resource utilization.

- Streams can be canceled: As shown by the RESET_STREAM frame for Stream 1, individual streams can be canceled or reset independently without affecting the rest of the connection.
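The RESET_STREAM frame in the diagram is built from QUIC's variable-length integers. The sketch below assumes RFC 9000: section 16 defines the integer encoding (the top two bits of the first byte give a length of 1, 2, 4 or 8 bytes) and RESET_STREAM is frame type 0x04 carrying a stream ID, an application error code, and the final size. In QUIC's own numbering, client-initiated request streams use IDs 0, 4, 8, and so on, so the demo cancels stream 4; the error code 0x010c is HTTP/3's H3_REQUEST_CANCELLED (RFC 9114). Helper names are hypothetical.

```python
def encode_varint(value: int) -> bytes:
    if value < 0 or value >= 1 << 62:
        raise ValueError("QUIC varints hold values in [0, 2**62)")
    for length, prefix in ((1, 0b00), (2, 0b01), (4, 0b10), (8, 0b11)):
        if value < 1 << (8 * length - 2):  # 6, 14, 30 or 62 usable bits
            encoded = bytearray(value.to_bytes(length, "big"))
            encoded[0] |= prefix << 6      # length marker in the top two bits
            return bytes(encoded)
    raise AssertionError("unreachable")


def decode_varint(data: bytes, pos: int = 0) -> tuple:
    # Returns (value, position just past the integer).
    length = 1 << (data[pos] >> 6)
    value = data[pos] & 0x3F
    for byte in data[pos + 1:pos + length]:
        value = (value << 8) | byte
    return value, pos + length


def reset_stream(stream_id: int, error_code: int, final_size: int) -> bytes:
    # RESET_STREAM: frame type, stream ID, application error code, final size.
    return b"".join(encode_varint(v) for v in (0x04, stream_id, error_code, final_size))


if __name__ == "__main__":
    # Abort one request stream after 1000 bytes; other streams are unaffected.
    frame = reset_stream(4, 0x010C, 1000)
    print(frame.hex())
    pos = 0
    while pos < len(frame):
        value, pos = decode_varint(frame, pos)
        print(value)
```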

HTTP/3, standardized in 2022 as RFC 9114, is designed to address the limitations of HTTP/2, particularly at the transport layer. HTTP/3 runs over QUIC, a transport protocol originally developed at Google and later standardized by the IETF (RFC 9000, 2021), which operates over UDP rather than TCP. Key features of HTTP/3 include:

- QUIC Protocol: By using QUIC, HTTP/3 benefits from faster connection establishment (1-RTT handshakes, and 0-RTT for resumed connections), more precise loss detection and recovery, and stream multiplexing without transport-level head-of-line blocking: a lost packet delays only the streams whose data it carried.

- Improved Security: QUIC builds TLS 1.3 into its own handshake, so every HTTP/3 connection is encrypted, and it also encrypts much of the transport-level metadata that TCP sends in the clear.

- Connection Migration: QUIC supports connection migration, allowing a session to continue seamlessly if the client’s IP address changes, such as when switching from Wi-Fi to mobile data.

- Reduced Latency: By operating over UDP and using features like 0-RTT, HTTP/3 can reduce latency and improve page load times, especially in environments with high packet loss or latency.
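Connection migration works because QUIC does not identify a connection by its addresses. The toy model below, with illustrative names, addresses, and connection IDs, contrasts a TCP-style lookup keyed by the 4-tuple (client IP and port, server IP and port) with a QUIC-style lookup keyed by the connection ID carried in each packet. It is a simplification: real QUIC validates the new path (PATH_CHALLENGE/PATH_RESPONSE) before trusting it, and clients usually switch to a fresh connection ID issued earlier by the server so observers cannot link the two paths, whereas this sketch reuses one ID.

```python
from collections.abc import Hashable
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

Address = Tuple[str, int]  # (IP address, UDP port)


@dataclass(frozen=True)
class Packet:
    client: Address        # changes when the client switches networks
    server: Address
    connection_id: bytes   # illustrative QUIC destination connection ID


def tcp_key(pkt: Packet) -> Hashable:
    # TCP: a connection is identified by the 4-tuple of addresses and ports.
    return (pkt.client, pkt.server)


def quic_key(pkt: Packet) -> Hashable:
    # QUIC: packets are matched to a connection by connection ID instead.
    return pkt.connection_id


class Demux:
    """Maps incoming packets to sessions using a pluggable key function."""

    def __init__(self, key_fn: Callable[[Packet], Hashable]) -> None:
        self.key_fn = key_fn
        self.sessions: Dict[Hashable, str] = {}

    def open(self, pkt: Packet, session: str) -> None:
        self.sessions[self.key_fn(pkt)] = session

    def route(self, pkt: Packet) -> Optional[str]:
        return self.sessions.get(self.key_fn(pkt))


if __name__ == "__main__":
    server = ("203.0.113.10", 443)
    on_wifi = Packet(("198.51.100.7", 50000), server, b"\x1a\x2b\x3c\x4d")
    on_mobile = Packet(("192.0.2.55", 61000), server, b"\x1a\x2b\x3c\x4d")

    for name, key_fn in (("TCP 4-tuple", tcp_key), ("QUIC connection ID", quic_key)):
        demux = Demux(key_fn)
        demux.open(on_wifi, "session-1")
        print(f"{name}: packet after switching networks -> {demux.route(on_mobile)}")
```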

The following table provides a clear comparison of how each version of HTTP has evolved to address the limitations of its predecessors and improve the efficiency, performance, and security of web communications.

| Feature | HTTP/1.0 | HTTP/1.1 | HTTP/2 | HTTP/3 |
|---|---|---|---|---|
| Introduction Year | 1996 | 1997 | 2015 | 2022 (QUIC-based) |
| Connection Management | Closes connection after each request | Persistent connections | Multiplexing over a single connection | Multiplexing over a single connection |
| Protocol Type | Text-based | Text-based | Binary framing | Binary framing over QUIC (UDP) |
| Header Compression | No | No | HPACK compression | QPACK compression |
| Multiplexing | No | No | Yes | Yes |
| Server Push | No | No | Yes | Yes |
| Connection Establishment | Slow, due to frequent connection setup | Improved with persistent connections | Faster with a single connection | Fastest, with 1-RTT and 0-RTT handshakes |
| Head-of-Line Blocking | Yes | Yes | Solved at the HTTP layer; remains at the TCP layer | Eliminated between streams by QUIC |
| Security | Optional, separate TLS | Optional, separate TLS | Separate TLS (required by browsers) | TLS 1.3 built into QUIC |
| Transport Layer | TCP | TCP | TCP | UDP (QUIC) |
| Request Pipelining | No | Limited (often problematic) | Superseded by multiplexing | Superseded by multiplexing |
| Connection Migration | No | No | No | Yes |

Conclusion

HTTP has come a long way since its inception, evolving from the simple and inefficient HTTP/1.0 to the advanced and highly efficient HTTP/3. Each version has built upon the strengths and addressed the weaknesses of its predecessors, ensuring that the protocol remains robust and capable of meeting the demands of modern web applications. As the web continues to grow and evolve, HTTP will undoubtedly continue to develop, providing a solid foundation for future innovations.